
Introduction to ViaLab (Sept. 2020 ~ )

The fourth industrial revolution (Industry 4.0) is the ongoing automation of traditional manufacturing and industrial practices using modern smart technologies such as the Internet of Things (IoT), cyber-physical systems (CPS), cloud computing, intelligent robotics, and artificial intelligence (AI). It will fundamentally change the way we live, work, and relate to one another, at an unprecedented scale, scope, and complexity. Combining the past decade of our R&D experience in smart systems with newly emerging AI technology, the Vehicle Intelligence and Autonomy Lab (ViaLab) focuses on enabling technologies for unmanned autonomous vehicles such as self-driving cars, pipeline robots, and automated guided vehicles (AGVs).

Self-driving Cars

A self-driving car is a vehicle capable of sensing its environment and moving safely without human intervention. One of the key challenges for self-driving cars is adopting artificial intelligence (AI) to achieve human-level perception and understanding of driving environments.

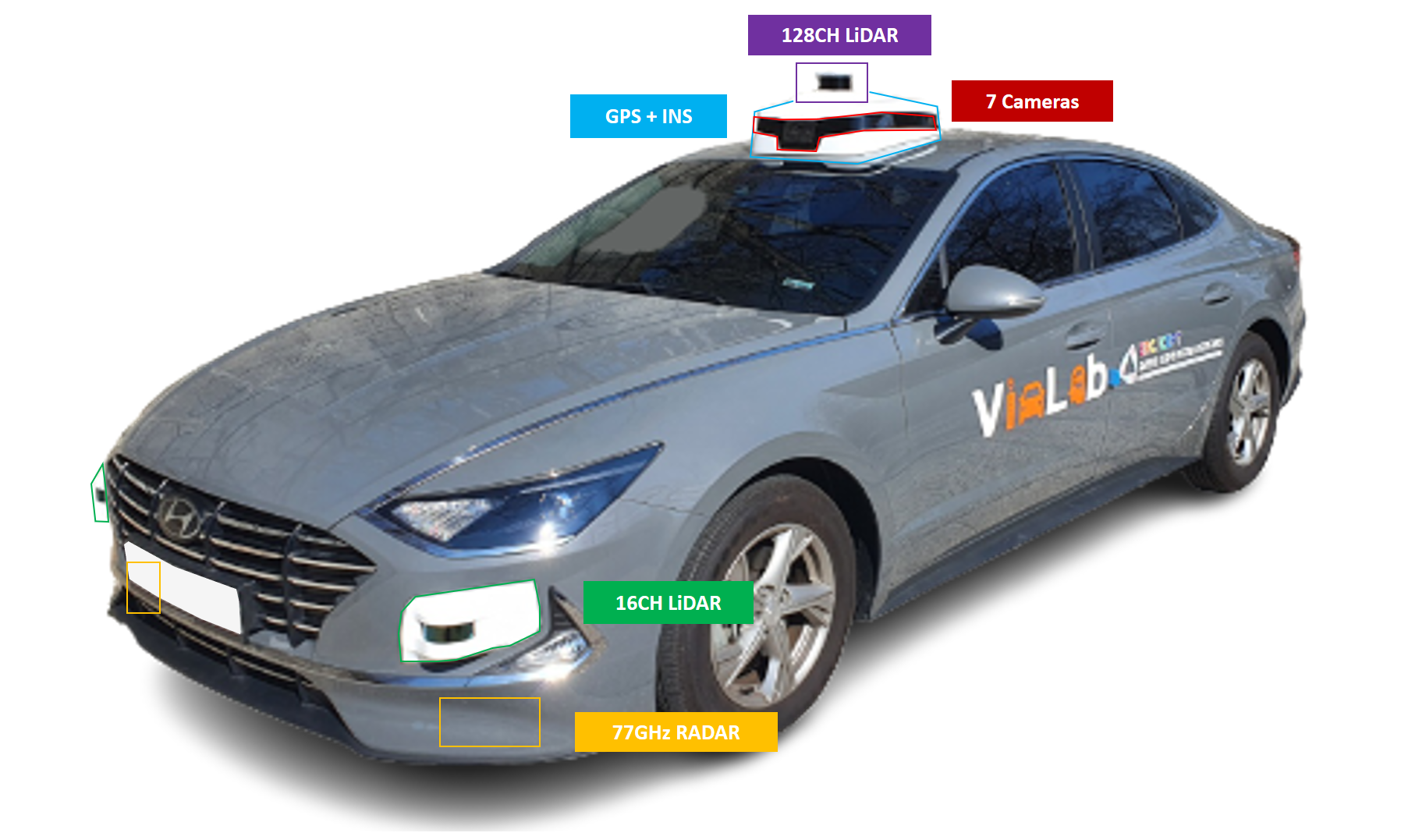

SONATA Platform

We built a self-driving vehicle platform based on the Hyundai SONATA DN8. The roof sensor module consists of a 128-channel LiDAR, six short-range cameras covering 360 degrees, and one long-range front-facing camera. To better detect vehicles entering an intersection from other roads, 16-channel LiDARs and 77 GHz radars are also mounted on both front sides of the vehicle. Equipped with a tactical-grade inertial navigation system (INS) for precise tracking of the vehicle trajectory, this platform can be used to construct high-precision road maps or AI datasets for autonomous driving.

Object Detection and Image Segmentation

The video below shows two approaches to visual perception of urban road images in front of the PNU main gate: object detection (left) and semantic segmentation (right). The object detection network classifies each object's type and localizes it with a bounding box, while the semantic segmentation network partitions an image into multiple segments, each labeled with an object type. Our object detection network is trained to directly recognize the phase of the horizontal traffic lights used on Korean roads, a basic requirement of level-4 autonomous driving. The semantic segmentation network can be used to extract the drivable road area, within which the planning task searches for a collision-free path.
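As a rough illustration of how a segmentation output might feed the planner, the sketch below takes a per-pixel class map, keeps the pixels labeled as road, and finds, for each image column, where the drivable region that starts at the bottom of the image (nearest the car) ends. The road class ID `ROAD_CLASS` and this column-wise free-space heuristic are assumptions for illustration, not a description of our network's output format or our planner.

```python
import numpy as np

# Hypothetical class ID for "road" in the segmentation label map (assumption).
ROAD_CLASS = 0

def drivable_mask(label_map: np.ndarray, road_class: int = ROAD_CLASS) -> np.ndarray:
    """Boolean mask of pixels the segmentation network labeled as road."""
    return label_map == road_class

def free_space_boundary(mask: np.ndarray) -> np.ndarray:
    """For each image column, return the topmost row index of the contiguous
    drivable region that starts at the bottom row (closest to the vehicle).
    Columns whose bottom pixel is not drivable get -1."""
    h, w = mask.shape
    boundary = np.full(w, -1, dtype=int)
    for col in range(w):
        row = h - 1
        # Walk upward from the bottom row while pixels remain drivable.
        while row >= 0 and mask[row, col]:
            row -= 1
        if row < h - 1:
            boundary[col] = row + 1
    return boundary
```

For a 3×3 label map `[[2, 2, 2], [0, 2, 0], [0, 0, 0]]`, the boundary is `[1, 2, 1]`: the road reaches one row higher in the outer columns than in the middle one.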

3-D Object Detection

We also use deep neural networks to detect road objects from LiDAR point clouds. With point clouds from a 128-channel Ouster LiDAR as input, the video below (3× speed) shows 3-D bounding boxes of vehicles, buses, motorcycles, and pedestrians. A self-driving vehicle can detect road objects more accurately and reliably by fusing camera, LiDAR, and radar data.
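A common way to represent the 3-D boxes produced by LiDAR detectors is a center, a size, and a heading (yaw) angle. The sketch below assumes that parameterization (the class `Box3D` and its fields are hypothetical names, not our detector's output format) and shows how to recover the bird's-eye-view corners of a box and test whether a LiDAR point falls inside it.

```python
import math
from dataclasses import dataclass

@dataclass
class Box3D:
    label: str      # e.g. "vehicle", "bus", "motorcycle", "pedestrian"
    x: float        # box center in the LiDAR frame [m]
    y: float
    z: float
    length: float   # extent along the heading direction [m]
    width: float
    height: float
    yaw: float      # heading angle about the vertical axis [rad]

    def bev_corners(self):
        """Return the four bird's-eye-view (x, y) corners, counter-clockwise."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        hl, hw = self.length / 2, self.width / 2
        corners = []
        for dx, dy in ((hl, hw), (-hl, hw), (-hl, -hw), (hl, -hw)):
            # Rotate the local offset by yaw, then translate to the box center.
            corners.append((self.x + c * dx - s * dy, self.y + s * dx + c * dy))
        return corners

    def contains(self, px: float, py: float, pz: float) -> bool:
        """True if the point (px, py, pz) lies inside the box."""
        dx, dy = px - self.x, py - self.y
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        # Rotate the point into the box frame (inverse rotation by yaw).
        lx = c * dx + s * dy
        ly = -s * dx + c * dy
        return (abs(lx) <= self.length / 2 and abs(ly) <= self.width / 2
                and abs(pz - self.z) <= self.height / 2)
```

Counting the points that fall inside each box is one simple way to sanity-check detections against the raw point cloud.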

Vehicle Control by Joystick Maneuvers

We also connect our computation server to the vehicle actuation system through an X-by-wire interface, so that control signals can be converted into the adaptive cruise commands of a commercial vehicle (Hyundai SONATA). The video below shows the steering wheel of our vehicle being controlled by joystick commands.
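The joystick-to-steering path can be sketched as a mapping from a normalized stick axis to a steering-wheel angle, with a deadzone around center and a rate limit so that consecutive commands change smoothly. All constants and function names here are hypothetical placeholders: the actual limits of the SONATA X-by-wire interface are not given above, and this code does not talk to any vehicle interface.

```python
# All limits below are hypothetical placeholders, not SONATA specifications.
MAX_WHEEL_ANGLE_DEG = 450.0   # steering-wheel angle at full joystick deflection
DEADZONE = 0.05               # ignore small stick noise around center
MAX_RATE_DEG_PER_S = 180.0    # limit on how fast the commanded angle may change

def joystick_to_wheel_angle(axis: float) -> float:
    """Map a joystick axis value in [-1, 1] to a steering-wheel angle [deg]."""
    axis = max(-1.0, min(1.0, axis))  # clamp out-of-range readings
    if abs(axis) < DEADZONE:
        return 0.0
    # Rescale so the output starts at 0 right at the deadzone edge.
    sign = 1.0 if axis > 0 else -1.0
    scaled = (abs(axis) - DEADZONE) / (1.0 - DEADZONE)
    return sign * scaled * MAX_WHEEL_ANGLE_DEG

def rate_limit(target: float, previous: float, dt: float) -> float:
    """Clamp the change between consecutive commands to MAX_RATE_DEG_PER_S * dt."""
    max_step = MAX_RATE_DEG_PER_S * dt
    return previous + max(-max_step, min(max_step, target - previous))
```

At a 10 Hz control loop (`dt = 0.1`), a full-lock request from a centered wheel is spread over many cycles of at most 18 degrees each, instead of being sent as a single step.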

Automated Valet Parking (AVP)

Automated valet parking (AVP) is expected to be among the first self-driving scenarios to be commercialized. It is also a promising technology for automated guided vehicles (AGVs) in the automation of smart factories and logistics warehouses.

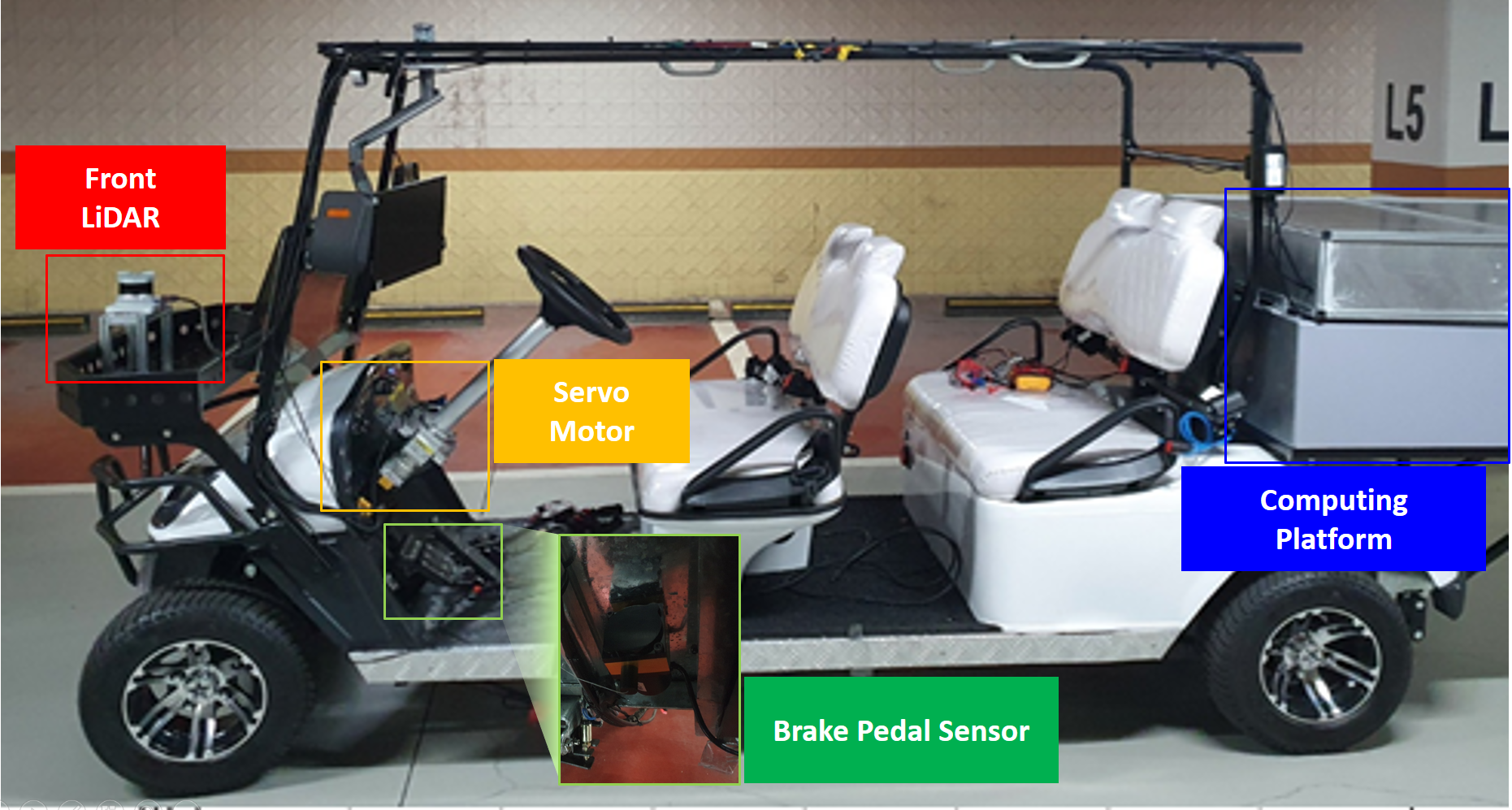

Golf Cart Platform

We built an AVP golf cart platform consisting of a sensor module comprising a 64-channel LiDAR, an inertial navigation system (INS), and a brake pedal sensor; an information processing module inside the trunk; and a control module for steering and acceleration.

Control Authority Switching

In manual driving mode, the driver requests parking through the AVP app, after which the golf cart switches to self-driving mode. In an emergency during self-driving mode, the control authority switching system lets the driver press the brake pedal to immediately take back control and respond in manual driving mode.
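The mode logic described above can be sketched as a two-state machine: a parking request from the app moves the cart from manual to self-driving mode, and pressing the brake pedal during self-driving returns control to the driver immediately. The class names and the pedal threshold `BRAKE_THRESHOLD` are assumptions for illustration, not our actual implementation.

```python
from enum import Enum, auto

BRAKE_THRESHOLD = 0.1  # hypothetical normalized pedal position that counts as "pressed"

class Mode(Enum):
    MANUAL = auto()
    SELF_DRIVING = auto()

class ControlAuthoritySwitch:
    def __init__(self):
        self.mode = Mode.MANUAL  # the cart starts under driver control

    def on_parking_request(self):
        """Parking request from the AVP app: hand control to the self-driving stack."""
        if self.mode is Mode.MANUAL:
            self.mode = Mode.SELF_DRIVING

    def on_brake_pedal(self, position: float):
        """Brake-pedal sensor reading in [0, 1]; pressing it overrides self-driving."""
        if self.mode is Mode.SELF_DRIVING and position >= BRAKE_THRESHOLD:
            self.mode = Mode.MANUAL

    def command_source(self) -> str:
        """Which side currently drives the steering and acceleration actuators."""
        return "planner" if self.mode is Mode.SELF_DRIVING else "driver"
```

The brake check does not depend on the planner's state, which matches the intent that the driver can always reclaim control.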

SLAM for Electric Vehicles

A core technology of self-driving is high-precision vehicle positioning. To this end, the AVP golf cart uses 3-D LiDAR point clouds and inertial navigation system (INS) measurements. With simultaneous localization and mapping (SLAM), the golf cart builds a map of its driving environment by scan matching LiDAR point clouds against a high-precision 3-D map, while simultaneously estimating its real-time position.
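The page does not say which scan-matching algorithm the golf cart uses (ICP and NDT are common choices). As an illustration only, the sketch below implements basic point-to-point ICP: it pairs each scan point with its nearest map point, solves for the best rigid transform with the SVD (Kabsch) method, and repeats. It works on 2-D or 3-D arrays, uses brute-force nearest neighbors, and omits the outlier rejection and INS prior a real system would use.

```python
import numpy as np

def best_rigid_transform(src: np.ndarray, dst: np.ndarray):
    """Least-squares rotation R and translation t with R @ src_i + t ≈ dst_i,
    for already-paired points (Kabsch / SVD method)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)   # cross-covariance of centered points
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:              # guard against a reflection
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = dst_c - R @ src_c
    return R, t

def icp(scan: np.ndarray, map_points: np.ndarray, iterations: int = 30):
    """Align a scan (N x d) to map points (M x d); returns the accumulated (R, t)."""
    dim = scan.shape[1]
    R_total, t_total = np.eye(dim), np.zeros(dim)
    current = scan.copy()
    for _ in range(iterations):
        # Brute-force nearest map point for every scan point.
        d2 = ((current[:, None, :] - map_points[None, :, :]) ** 2).sum(axis=2)
        matched = map_points[d2.argmin(axis=1)]
        R, t = best_rigid_transform(current, matched)
        current = current @ R.T + t
        # Compose the incremental transform with the running estimate.
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total
```

The returned `(R, t)` maps scan coordinates into the map frame, so it is the vehicle pose correction relative to the map. ICP only converges reliably from a good initial guess, which is one reason to seed it with INS measurements.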

AVP Demo at PNU Campus

AVP enables vehicles to park automatically without driver intervention. This includes finding a parking space within a designated area, navigating to it, and completing the parking maneuver within the designated parking slot.

When the driver arrives at the parking lot entrance by manual driving and requests valet parking through the AVP smartphone app, the golf cart computes the shortest path from its current position to the destination parking slot. A model predictive control (MPC) module then determines the speed and steering of the golf cart during self-driving. The video below demonstrates our AVP golf cart parking itself successfully at the PNU Jangjeon campus in an exclusive traffic scenario.
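The page does not name the shortest-path planner. As one standard option, the sketch below runs A* on a hypothetical occupancy grid of the parking lot (0 = free cell, 1 = occupied), using the Manhattan distance as an admissible heuristic for unit-cost 4-connected moves. In a pipeline like the one described, an MPC controller would then track the resulting path; that part is not shown.

```python
import heapq

def astar(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = occupied).
    Returns the list of (row, col) cells from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]   # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry superseded by a cheaper one
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None
```

A grid planner like this ignores the cart's turning radius; practical systems often use a kinematically aware search (e.g. Hybrid A*) or smooth the path before handing it to the controller.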

AVP Demo with Mixed Traffic

In a mixed traffic scenario, our AVP golf cart accurately perceives both stationary and dynamic objects in its surroundings, enabling it to generate safe paths and avoid collisions in real time. This capability allows the golf cart to navigate and park itself in unstructured parking areas with arbitrary traffic, without driver intervention.
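One simple building block for this kind of behavior is checking a candidate path against predicted obstacle positions. The sketch below assumes a constant-velocity motion model for each perceived object (zero velocity for stationary ones) and a fixed safety radius; the names and the model are illustrative assumptions, and a real planner would use richer prediction and the vehicle's actual footprint.

```python
import math
from dataclasses import dataclass

@dataclass
class Obstacle:
    x: float
    y: float
    vx: float = 0.0   # zero velocity means a stationary object
    vy: float = 0.0

def first_conflict(trajectory, obstacles, safety_radius=1.0):
    """trajectory: time-ordered list of (t, x, y) samples of the planned path.
    Returns the earliest sample time at which the cart comes within safety_radius
    of an obstacle predicted with constant velocity, or None if the path is clear."""
    for t, x, y in trajectory:
        for ob in obstacles:
            # Predicted obstacle position at time t.
            ox, oy = ob.x + ob.vx * t, ob.y + ob.vy * t
            if math.hypot(x - ox, y - oy) < safety_radius:
                return t
    return None
```

If a conflict is found, the planner can slow down along the same path or search for an alternative path before that time.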

Automated Guided Vehicle Control System (ACS)

To coordinate multiple AGVs' access to shared resources such as intersections, we are developing an open-source, platform-independent, and vendor-independent AGV control system (ACS), which has been deployed in a Sungwoo HiTech factory since December 2023.
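Coordinating access to a shared intersection is essentially a mutual-exclusion problem. A minimal sketch, assuming a single intersection guarded by a first-come-first-served queue (the class and the AGV IDs are hypothetical, not the ACS's actual scheduling policy), might look like this:

```python
from collections import deque

class IntersectionManager:
    """Grants one AGV at a time access to a shared intersection, first come first served."""

    def __init__(self, name: str):
        self.name = name
        self.holder = None       # AGV currently allowed inside the intersection
        self.waiting = deque()   # AGVs queued in request order

    def request(self, agv_id: str) -> bool:
        """Return True if agv_id may enter now; otherwise queue it and return False."""
        if self.holder is None or self.holder == agv_id:
            self.holder = agv_id
            return True
        if agv_id not in self.waiting:
            self.waiting.append(agv_id)
        return False

    def release(self, agv_id: str):
        """Call when agv_id has cleared the intersection; returns the next AGV granted, if any."""
        if self.holder != agv_id:
            raise ValueError(f"{agv_id} does not hold {self.name}")
        self.holder = self.waiting.popleft() if self.waiting else None
        return self.holder
```

Because the manager only sees abstract AGV IDs, the same logic applies regardless of which vendor built each vehicle.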

The AGV abstraction layer (AAL) of our ACS resolves protocol inconsistencies among multi-vendor AGVs and provides a unified protocol interface to the core modules of the ACS. The video below (2× speed) shows our ACS successfully coordinating the simultaneous access of Meidensha and Aichi CarryBee AGVs to an intersection.
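The role of an abstraction layer like the AAL can be illustrated with the adapter pattern: one adapter per vendor protocol converts raw messages into a single status record that the core modules consume. The vendor message formats, key names, and `AgvStatus` fields below are entirely hypothetical and do not reflect the real Meidensha or Aichi CarryBee protocols.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass

@dataclass
class AgvStatus:
    """Unified status record consumed by the core ACS modules (hypothetical fields)."""
    agv_id: str
    node: str           # current node on the factory route map
    battery_pct: float
    moving: bool

class AgvAdapter(ABC):
    """One adapter per vendor protocol; the core modules never see vendor formats."""
    @abstractmethod
    def parse_status(self, raw: dict) -> AgvStatus: ...

class VendorAAdapter(AgvAdapter):
    # Hypothetical vendor A: battery as a 0.0-1.0 fraction, state as a string.
    def parse_status(self, raw: dict) -> AgvStatus:
        return AgvStatus(raw["id"], raw["position"], raw["battery"] * 100.0,
                         raw["state"] == "RUNNING")

class VendorBAdapter(AgvAdapter):
    # Hypothetical vendor B: different key names, battery in percent, motion as a flag.
    def parse_status(self, raw: dict) -> AgvStatus:
        return AgvStatus(str(raw["vehicleNo"]), raw["nodeId"], float(raw["soc"]),
                         bool(raw["isMoving"]))

ADAPTERS = {"vendor_a": VendorAAdapter(), "vendor_b": VendorBAdapter()}

def normalize(vendor: str, raw: dict) -> AgvStatus:
    """Entry point used by the core: pick the vendor's adapter and normalize the message."""
    return ADAPTERS[vendor].parse_status(raw)
```

Supporting a new vendor then means writing one more adapter, without touching the scheduling or dispatching code.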

ACS Deployment at Sungwoo HiTech

Our ACS was successfully deployed on a new production line at Sungwoo HiTech's Seo-Chang factory in December 2023. It efficiently manages the flow of AGVs at intersections and optimizes scheduling and dispatching through integration with Sungwoo HiTech's Manufacturing Execution System (MES).

Non-Destructive In-Line Inspection for Smart Infrastructure

To be announced soon…

Introduction to NSSLab (Sept. 2008 ~ Aug. 2020)

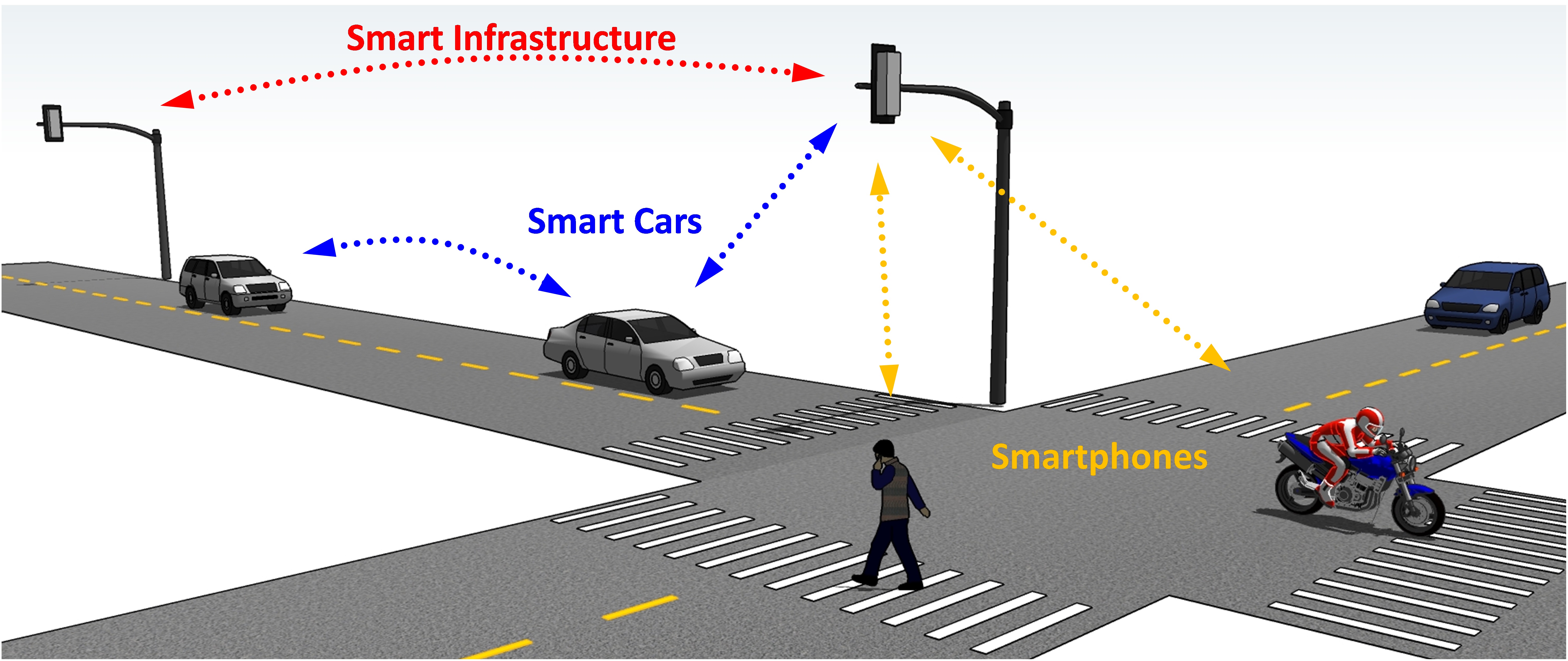

Over the last few decades, we have witnessed the Internet revolution, which has significantly changed our daily lives. Wherever we are and whatever we do, we are always connected to the Internet through wired and wireless network infrastructure, which can be seen as (human-centric) ubiquitous networking. However, this megatrend need not be confined to communication among human beings. For example, in recent years we have witnessed the explosive market growth of the SMARTPHONE, an embedded computer system capable of accessing the Internet via cellular or Wi-Fi networks. Another example is the SMART-GRID system, a new generation of electric power systems interconnected with the Internet.

We strongly believe that this revolution will extend to the networking of SMART SYSTEMS, which can be defined as device-centric (or machine-centric) networking. In the Networked Smart Systems (NSS) Laboratory, we study state-of-the-art technologies to resolve the many issues in networking such smart systems, including SMART VEHICLES and SMART NETWORK INFRASTRUCTURE.